What's become clear over the past few years is that AI has rapidly changed how students learn, and it's only poised to continue doing so in 2026. Because of its ability to quickly explain, summarize, clarify, and model problem-solving, AI has become a sort of academic safety net for millions — a tool that makes homework faster and more accessible. But this ease brings an important consideration: is AI helping students think better, or is it simply allowing them to cut corners?

The answer isn't as simple as "AI is good" or "AI is bad." It depends on how students use the technology. Used well, AI can support deep learning and self-confidence; used poorly, it becomes a shortcut that undermines the very cognitive skills students need to thrive. This is where cognitive offloading comes into play: the tendency of learners to rely on technology such as AI, rather than their own mental abilities, to complete challenging tasks. Cognitive offloading is not new. We've written about it before, and it's a primary concern as AI use grows in academic settings.

Productive Struggle: A Way to Combat Cognitive Offloading

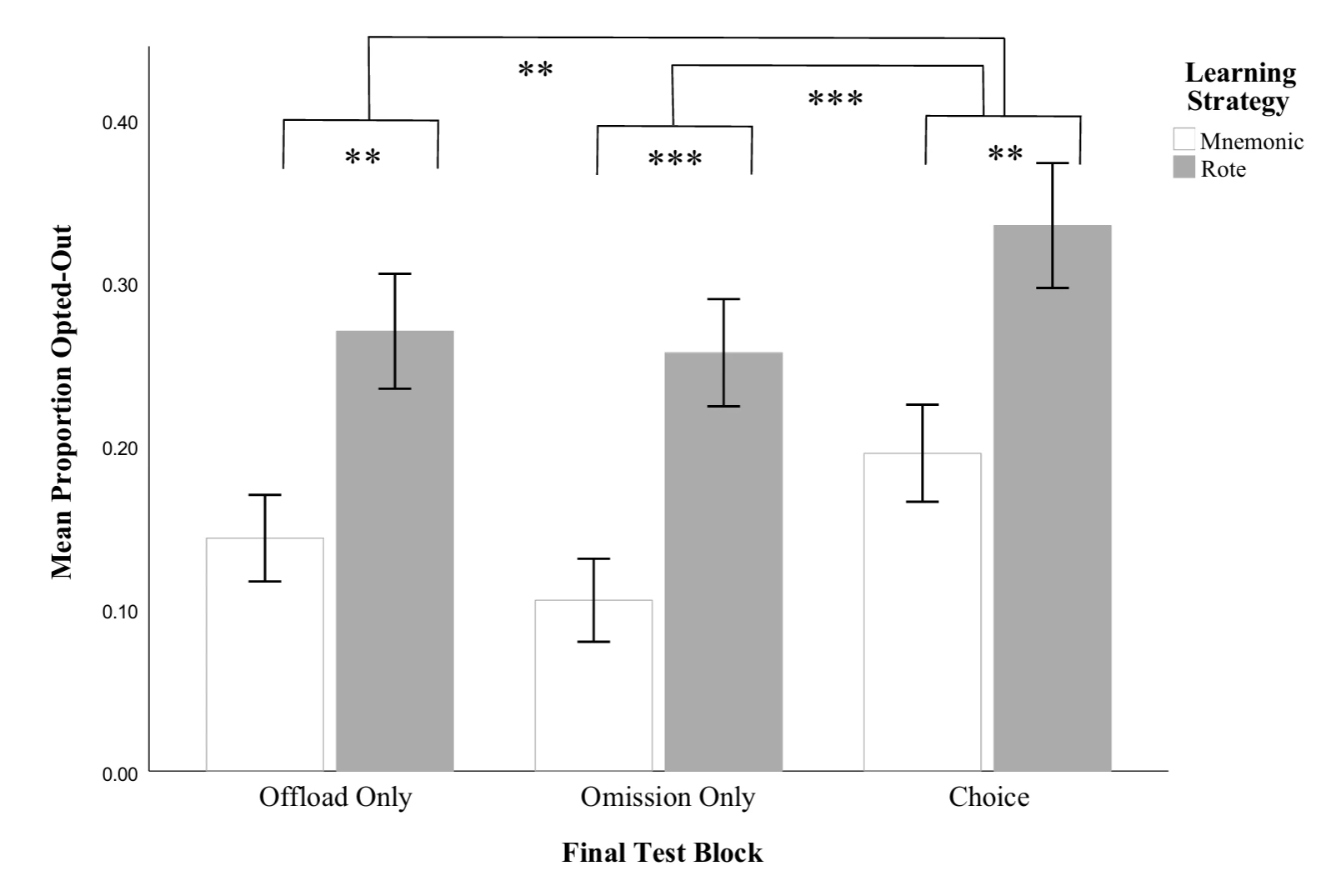

Cognitive offloading was the subject of a 2025 study by Donet, Marshall, and Serra, in which the researchers explored how different learning strategies influence decisions to rely on external aids during memory retrieval tasks. Participants were trained using either mnemonic (associative) strategies or rote repetition, then given the choice to either attempt to recall the information or opt out by offloading the task. Those who used mnemonic strategies offloaded less often, and spent more time engaged with the task before choosing to offload, than those who relied on more mechanical rote learning.

Results are illustrated in the figure below.

This suggests that richer, more connected learning approaches encourage deeper cognitive engagement, making learners less likely to hand their thinking off to external tools. In other words, when learners form stronger internal associations, they persist longer, which highlights the value of productive struggle over shortcuts in an era of AI-assisted education.

With that in mind, let's cover five common academic shortcuts students take with the help of AI, along with the productive struggle learners can embrace in place of each one.

1. Shortcut: Asking for Complete Answers

This one is pretty straightforward. Many time-pressed students have gone to ChatGPT with a request along the lines of, "Write this essay for me," or, "Tell me the answer to this problem."

The pitfall is equally straightforward: asking for answers gives students a finished product without requiring them to engage with the underlying reasoning. This robs them of opportunities to apply reasoning, practice problem-solving, and develop conceptual understanding.

Instead: Break the problem into manageable parts and solve it piece by piece. Use AI to shore up the specific steps where you're weak rather than having it do the entire problem for you.

2. Shortcut: Using AI to Summarize Without Reflection

Here's another fan-favorite AI command, and it's nearly as risky as the one above. By relying on AI to generate instant summaries, students short-circuit their own comprehension. Without first trying to interpret the material themselves, learners lose the opportunity to actually internalize its insights.

Instead: Take the time to draft a summary yourself, then ask AI to fill in the gaps or generate its own version for comparison. This way, you do the reflecting yourself while AI supplements any knowledge you may be missing.

3. Shortcut: Letting AI Edit Instead of Evaluate

As with summarizing, it's easy to use large language models (LLMs) uncritically when editing. A classic example is pasting your own writing into ChatGPT with the command, "Make my writing better." The LLM won't hesitate to produce an edited version, but whether that output is actually better isn't always apparent.

The thing is, AI editing can mask underlying gaps in writing, such as flaws in logic or reasoning. Sure, your writing may look better after ChatGPT removes any glaring punctuation issues, but without addressing fundamental flaws, your writing's improvements will be limited to the surface level.

Instead: First ask AI to evaluate your writing for unclear logic or questionable structure. Treat it as a thought partner that raises ideas worth considering, then make the revisions yourself.

4. Shortcut: Practicing With Solutions Attached

Even students who wish to use AI for legitimate practice may still fall prey to shortcuts if they request problems with solutions. After all, it takes a lot of willpower to look away from the answer when it's staring you in the face, and it's tempting to take a peek, even before the work is finished.

Instead: Request practice problems without solutions, attempt them on your own, and only then ask AI to check your work. Spend time thinking and grappling with the ideas in your head, allowing yourself to experience the exact kind of growth practice problems are meant to enable.

5. Shortcut: Accepting AI Output Uncritically

Last, we have a shortcut that is especially difficult to guard against because it requires a lot of patience and wisdom on the part of the student: simply accepting an AI's output as correct without further consideration. Unfortunately, doing so erodes critical thinking and surrenders student agency.

Instead: Don't be afraid to ask follow-up questions such as "What assumptions might have been made here?" and "Are there other solutions I may not be considering?" Doing so opens the door to more nuanced (and potentially more complete) answers, and your critical thinking skills and grades will thank you.

What "Productive Struggle" Means for Grassroot

Educational research has consistently found that effortful cognitive engagement (asking difficult questions, wrestling with complexity, and persisting despite confusion) is not a barrier to learning; it's a mechanism of learning itself. It is through productive struggle that students:

- Form stronger mental models

- Transfer knowledge to new contexts

- Develop confidence in their own thinking

AI shortcuts are appealing because of the time they save, but that savings comes at the cost of dependency: thinking becomes something students expect from a tool rather than something they practice themselves. This is why, at Grassroot Academy, our tutors encourage productive engagement rather than passive consumption. We guard against each of these AI-driven shortcuts, and others, to provide the best learning experience possible.

That means:

- Promoting guidance instead of spoon-feeding

- Encouraging reflection rather than simple summarization

- Applying AI as a thought partner, not an expert with unilateral authority

- Providing problems that are meant to be practiced, not memorized

- Sharing output that encourages dialogue, not blind acceptance

AI can be an extraordinary learning companion, but only when it enhances thinking rather than replacing it. The difference between shortcuts and productive struggle is the difference between "done" and "mastered."

And in an ecosystem of many technically advanced study tools, it's what gives students at Grassroot the edge they need to thrive.

Ready to learn with AI that builds thinking, not bypasses it? Explore Grassroot Academy and discover what productive struggle feels like with the right support.

Source

Donet JR, Marshall PH, Serra MJ. The effect of learning strategies on offloading decisions in response recall. Memory & Cognition. 2025 Jul 11. doi: 10.3758/s13421-025-01750-9. Epub ahead of print. PMID: 40643828.